Becoming an AI-Enhanced Engineer

Or: reflections and workflows from an engineer learning to build with AI

The last couple of months have been the most fun and energizing of my entire time at my current company, and I have been there for almost five years. It’s been so much fun that I have sometimes put in long after-work sessions of building and learning after putting my two very young kids to sleep. And believe me, doing any work after getting toddlers to bed takes a lot of energy, so it has to be something that fills you with energy and excitement to keep going. The energy came from working with the new AI paradigm that is shaping our field. It’s now clear to me that everything we know about programming and building software is changing. AI is going to change how we plan, design, and deliver software.

This post is my humble attempt to put into words my thoughts and learnings from building with and for AI. It’s also a way for me to share a few personal views on AI tooling infrastructure and what tech companies should do to innovate faster.

I also hope it inspires more engineers to try the internal GenAI tools. Some of them take a bit of upfront time to learn, but the payoff is real and it compounds fast.

Now, let’s dive right in.

What’s changing?

So what exactly is changing that is worth paying attention to? Most of you have probably heard the now common phrase, often from big tech CEOs, that AI is going to take our jobs and will soon be better at writing software than most of us. I only partly agree with the second part, but I also can’t fully ignore the first.

What I believe more and more is this: if our jobs ever do get replaced, it will not be by AI agents or assistants. It’ll be that engineers who use AI well will raise the bar, and those who can’t keep up may fall behind.

These AI-enhanced engineers understand the AI tools, have their workflows and know when to use them, and how to use them to get more done. Compared to engineers who do not use AI tools for learning or writing code, AI-enhanced engineers can get a lot more done in the same amount of time. That kind of output gives any tech company a reason to push their teams to use AI for engineering productivity.

But, frankly, the reason to use these tools should not be fear of being replaced. That is not a strong motivator for most of us, including me. The better reason is to rethink how we spend our time at work. It is a chance to cut down on work that’s manual and inefficient so we can move on to higher-level work that’s more fulfilling and matters more.

A new category of builders is emerging

As AI models keep improving fast and code becomes easier and cheaper to produce, a new category of software builders is starting to form. These builders will likely shape how we build software in the future, moving us from traditional software practices toward more AI-driven ones.

I now see two types of software engineers that are growing inside tech companies:

AI engineers sit somewhere between traditional software engineers and ML engineers. They don’t train models from scratch, but they build on top of LLMs, agents, and the growing set of AI tools. They know how to take an intelligent model and turn it into real features and products. As foundational models keep getting better, I expect more tech companies will start hiring more of these engineers. They’ll be the ones who can spot good use cases where LLMs can raise the value of what we ship.

AI-enhanced engineers are software engineers who use AI tools to level up their work. They might not be building agents yet, but they know how to automate tasks, move faster, and save time with GenAI tools. They know how to prompt, how to get unstuck, and how to find answers faster with help from AI.

Both roles matter, and both are going to grow. AI engineering may become its own job title, but AI-enhanced engineering is something any developer can start doing today. This post is my way of sharing what I’ve learned using GenAI tools and offering a few ideas for how others can begin their own path into AI-enhanced engineering.

But what is there to build?

Ok, let’s say we all become AI-enhanced engineers. Now what? What do we build that’s going to be different from before?

Over the past years, we’ve built many great products and services without using AI that bring joy to many people. That won’t change anytime soon. But with AI models getting increasingly smarter at planning and coding, we are opening the door to new opportunities that could significantly change how we build and operate our tools and services. But how?

I believe that instead of working directly with code as the main artifact and writing most of it ourselves, we will begin to delegate that work to smart AI agents. These agents will help us write, test, and run code on our behalf. That doesn’t mean we’ll run out of things to do. As humans, we’re good at finding new ways to create value, and I believe that will stay true for software engineers as well. But our work will shift. We’ll spend more time reviewing and verifying code, creating new abstractions like agents, and finding ways to put them to work across many of our manual and inefficient workflows.

I was thinking the other day that it might even feel a bit like babysitting toddlers—keeping them busy with tasks they enjoy while guiding them safely and helping them learn along the way.

To make this shift happen, we’ll need to build agent-friendly infrastructure. It needs to offer the core building blocks to support this new way of working. That won’t be easy, but I think it’s an exciting challenge that’s worth solving.

To enable this agentic infrastructure, tech companies will need to support the following building blocks:

Model Intelligence

Models like Claude Sonnet 4.0, OpenAI o3, and Gemini Pro 2.5 are essential for agentic capabilities. We need access to the latest state-of-the-art large language models. The engineering teams need access to these models as soon as they’re available from providers like OpenAI, Anthropic, and Google. This near-instant access is critical for developers, since each new model often brings a step-function improvement in code generation, reasoning, and tool use.

Memory and Context Management

Context management is an important but difficult problem when building AI products. Progress across the industry has been slower than expected, and even the latest models (like Sonnet 4.0 or Opus 4.0) still struggle with large contexts. Most support around 200K tokens, with Gemini Pro 2.5 going up to ~1M, but performance tends to drop as the context grows.

This is a real challenge for working with large codebases, which many of us do daily. The good news is there are workarounds like vector stores that use RAG-based techniques to help expand context effectively.
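To make the retrieval idea concrete, here is a minimal sketch of picking the most relevant code chunks for a question before sending anything to a model. It is a toy: it scores chunks with word-count cosine similarity instead of learned embeddings and a real vector store, and the file names and contents in `CHUNKS` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9_]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of up to k chunks that share vocabulary with the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(text))), name) for name, text in chunks.items()]
    # Highest score first; ties broken by name so the order is stable.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for score, name in scored[:k] if score > 0]


# Hypothetical chunks standing in for files in a large codebase.
CHUNKS = {
    "billing.py": "def charge_customer(invoice): compute invoice total and charge card",
    "auth.py": "def login(user, password): verify password and create session token",
    "search.py": "def search_index(query): rank documents for the query",
}

if __name__ == "__main__":
    print(retrieve("how do we verify a user password", CHUNKS, k=1))
```

Only the retrieved chunks would then go into the prompt, which keeps the context small even when the codebase is not.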

As a side note, I sometimes worry these models are only really good at small-context programming (like IOI-style problems). The big question is: how far can we push their usefulness into areas that need deeper reasoning and harder forms of validation beyond code?

MCPs and Tools

An agent is an LLM with access to tools. Tools give agents the ability to act outside of their own context window. This access is managed through a standard protocol called MCP (Model Context Protocol).
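As a rough illustration of "an LLM with access to tools", the sketch below shows the basic loop: the model either answers or asks for a tool, and the tool result is fed back in. Everything here is an assumption for illustration: `fake_model` is a hard-coded stand-in for a real LLM call, `count_lines` is a toy tool, and the message shapes are invented rather than taken from the MCP wire format or any vendor API.

```python
import json
from typing import Callable

# Hypothetical tool registry: tool name -> plain Python function.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Register a function so the agent loop can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def count_lines(text: str) -> str:
    """Toy tool: report how many lines a piece of text has."""
    return str(len(text.splitlines()))


def fake_model(messages: list[dict]) -> dict:
    """Stand-in for an LLM call; a real model would decide what to do here."""
    if messages[-1]["role"] == "tool":
        return {"type": "answer", "content": f"The text has {messages[-1]['content']} lines."}
    return {"type": "tool_call", "name": "count_lines", "arguments": json.dumps({"text": "a\nb\nc"})}


def run_agent(prompt: str, model: Callable[[list[dict]], dict] = fake_model, max_steps: int = 5) -> str:
    """Ask the model, run any tool it requests, and feed the result back."""
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_steps):
        reply = model(messages)
        if reply["type"] == "answer":
            return reply["content"]
        result = TOOLS[reply["name"]](**json.loads(reply["arguments"]))
        messages.append({"role": "tool", "name": reply["name"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")


if __name__ == "__main__":
    print(run_agent("How many lines are in my text?"))
```

A shared protocol matters because it lets the tool side of this loop be written once and reused by any agent, instead of being re-registered by hand in every project.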

I believe tech companies should keep investing here, especially to make it easier to build and use MCP servers across teams, with a strong focus on good user experience.

Agent Development Kit

An Agent Development Kit (ADK) is a set of tools that helps engineers build and manage AI agents more easily. Think of it like this: just like we use SDKs to build apps, we’ll use ADKs to build smart agents that can reason, act, and help with tasks.

Without an ADK, everyone will build agents in different ways. That makes it harder to scale. We need to standardize how agents are created, tested, and managed. I think this is a critical area we need to invest in and get right from early on.

I hope by this point in the post, I’ve convinced you that the future is bright—and that there’s a lot of exciting work ahead, both with AI and for AI. Now I’ll try to close the loop by showing how I use GenAI tools to be more productive, and maybe inspire you to try them too. Let’s go!

My agentic coding workflows

One caveat: I expect some of these workflows will age quickly. The pace of innovation in AI is extremely fast, so what worked a month ago might already feel outdated. That’s why I’m focusing on concepts that I believe have a better chance of lasting. Most of the tools I mention below have great documentation, tutorials, and YouTube videos you can check out if you want to go deeper.

Project Planning & Management

As much as we enjoy writing code, we all end up doing some form of project management—whether it's understanding requirements or simply communicating with others. In the agentic coding world, where code generation is heavily commoditized, we’ll spend more time on planning. I’ve found that the more time I spend creating a clear and detailed spec for what I want to build, the better the model performs when generating code. Sometimes, if the plan is solid, the model gets it right on the first try. There’s less boilerplate and more clarity in the model’s decisions.

I’ve spent hours, and sometimes even days, just planning a feature before sending it to the model. For me, this is the most important step in the build cycle. I always include screenshots or UI mockups if they help explain what I’m asking the model to do.

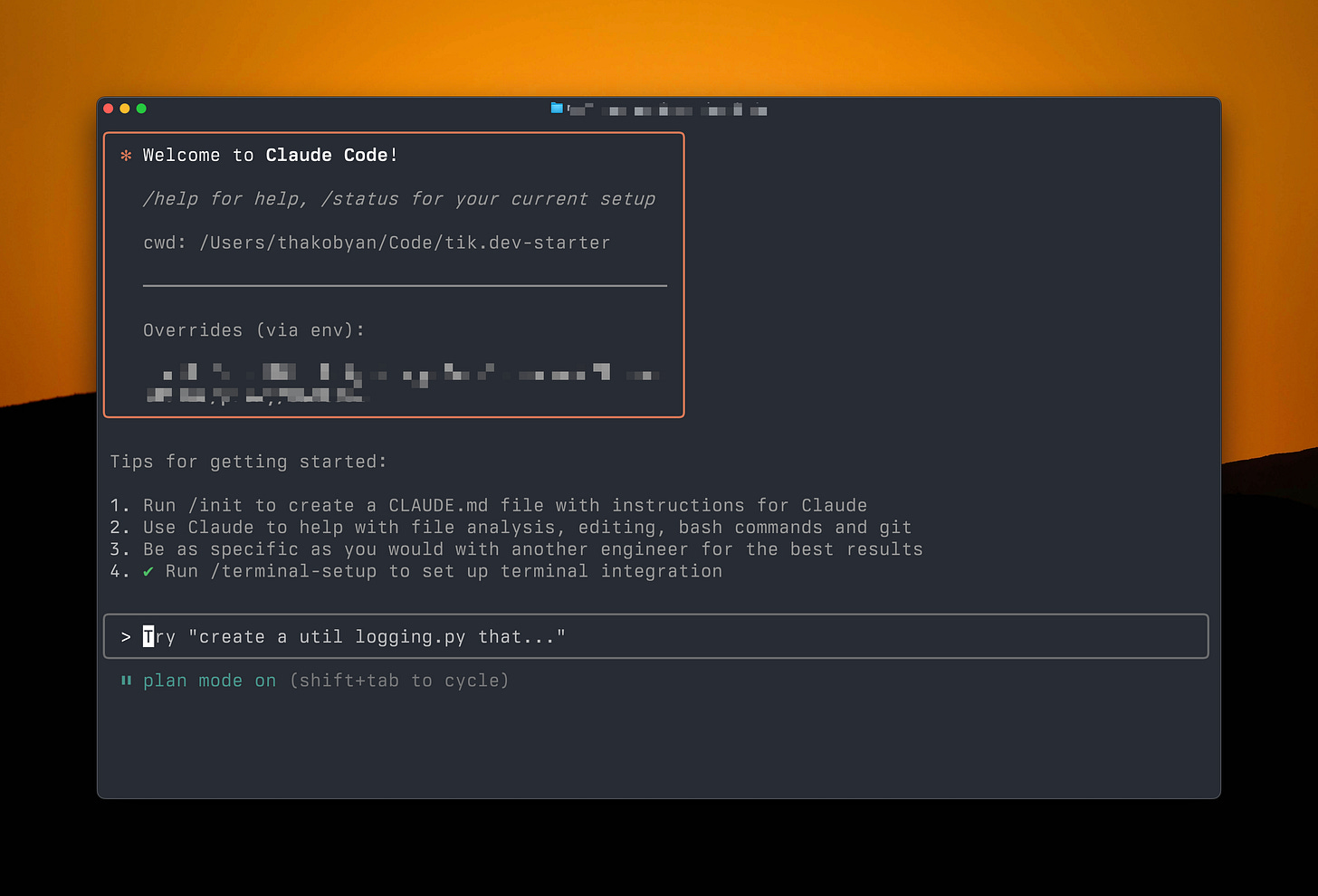

The good news is that agents can help with planning too. You can ask them to generate a plan for the feature you’re building. I’ve found that Claude Code, especially when using the Opus 4 reasoning model, is really good at this. I recommend using Claude Code’s “plan mode,” which lets it generate a plan you can review and refine before making any code changes. You probably don’t need this for small one-off changes, but it’s extremely useful for larger features or full projects.

Here’s a video I recorded where I ask Claude Code to generate a plan for a hypothetical project in one of the services my team owns. It does a good job on the first try. You can then ask it to follow the plan, or you can take that draft and refine it into something better.

There are also prompt-driven workflows where the model builds the plan step by step and asks you questions along the way. I’ve used one like that below, and it works well for more complex features.

Code Context:

<Paste Code Here>

Problem Statement:

[Describe your specific problem or feature request here]

Prompt:

I need help implementing a solution for the problem described above. Please conduct a systematic requirements gathering by asking me targeted questions one at a time.

Structure your questions to progressively build understanding across these areas:

- Current user experience and expected behavior

- Technical implementation approach and constraints

- Integration points with existing systems

- Data flow and relevant mechanisms

- Performance considerations and edge cases

- Dependencies and compatibility requirements

- Testing and validation strategy

Ask only one focused question at a time, building each subsequent question on my previous answers. Start with understanding the current situation and what specific outcome would be most valuable.

Begin when ready.

When I need to include code context (see the <Paste Code Here> part of the prompt), especially from large codebases, I use two tools, Repo Prompt and Repomix, to combine files into a format that fits within a model’s context window. These tools let you turn a codebase into an LLM-friendly file, which is super helpful. If you’re new to Repo Prompt, I recommend starting with their tutorial video. It’s great. Both tools can be run locally so no company-specific code gets uploaded to any server.
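For a feel of what "combining files into one LLM-friendly file" means, here is a small sketch that runs entirely locally. It is not how Repo Prompt or Repomix work internally; the `=== path ===` delimiter, the default suffixes, the character budget, and the four-characters-per-token estimate are all assumptions chosen for illustration.

```python
from pathlib import Path


def pack_repo(root: str, suffixes: tuple[str, ...] = (".py", ".md"), max_chars: int = 400_000) -> str:
    """Concatenate matching files under root into one delimited text blob."""
    base = Path(root)
    parts: list[str] = []
    used = 0
    for path in sorted(base.rglob("*")):
        if not path.is_file() or path.suffix not in suffixes:
            continue
        body = path.read_text(encoding="utf-8", errors="replace")
        # Prefix each file with its relative path so the model can tell files apart.
        section = f"=== {path.relative_to(base).as_posix()} ===\n{body}\n"
        if used + len(section) > max_chars:
            break  # stop before exceeding the character budget
        parts.append(section)
        used += len(section)
    return "".join(parts)


def estimate_tokens(text: str) -> int:
    """Very rough heuristic (assumption): about four characters per token."""
    return len(text) // 4


if __name__ == "__main__":
    packed = pack_repo(".")
    print(f"{len(packed)} characters, roughly {estimate_tokens(packed)} tokens")
```

The output of `pack_repo` is what would be pasted into the Code Context slot of the prompt above, and the token estimate is a quick check on whether it is likely to fit.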

If part of your job involves building design mocks to get feedback from customers, I’ve found tools like v0 or Lovable to be very useful. They let you quickly prototype your ideas with an interactive UI, instead of relying on Figma to create static artifacts.

To wrap up this section, since this post is getting long: I believe systems thinking is becoming a critical skill for software developers in the age of AI. Understanding the big picture of what you’re building, how the parts connect, and being able to clearly explain that to an AI coding agent is now a key part of the job, and that is not going away.

Cursor

When I talk to folks about Cursor, I feel like Steve Ballmer in his famous “Developers, developers, developers” chant from the early 2000s, except I’m chanting “Cursor, Cursor, Cursor.” I think it’s because I really like it.

Cursor is an agentic code editor and a fork of VSCode. At first glance, it looks just like VSCode. But its agentic UX and multi-file tab completion make it much more compelling. If you already use VSCode, you can jump right in with the same key shortcuts and plugins—nothing changes there. It’s like switching to a new Chromium browser if you’re already a Chrome user. Cursor makes you productive without any initial setup as its defaults are usually enough for you to start coding and feel productive.

My Cursor setup is pretty basic. The main differentiator is knowing which model to use for which task.

I rely heavily on a few models when using Cursor:

O3 / Gemini Pro 2.5 / Opus 4 (Max mode) for very complex reasoning tasks or asking hard questions in “Ask” mode.

Claude Sonnet 4.0 for most other code tasks. If I run into a loop where Sonnet underperforms, I’ll switch to one of the reasoning models above.

I also tag files and directories (with @) a lot when giving instructions to the model. I often start a new agent chat, both to avoid overwhelming the context window and whenever the model starts hallucinating. I’ve found it’s usually a lot easier to start from scratch than to instruct the model to fix the changes it made.

When building UIs, I depend on the Playwright MCP server to let the model self-correct when the UI doesn’t appear where it should. Cursor now makes it easy to add MCPs thanks to their 1.0 release.

What really sets Cursor apart is the Cursor Tab. It’s their built-in autocomplete feature, powered by a custom model trained by the Cursor team. I find it more powerful than GitHub Copilot. While Copilot can only add text at your cursor, Cursor Tab can also change and delete code around it. It even supports multi-file suggestions.

Another thing I think makes Cursor unique is how well it understands the codebase. It feels like it “knows” your project better than most tools. I believe that’s because it computes embeddings for each file and uses that to give more accurate responses.

One caveat: Cursor still has limited support for JVM-based languages like Java or Scala, which my team uses. So when I work on Java codebases, I use Cursor alongside IntelliJ. Cursor becomes my agentic interface, and I use IntelliJ to run and test the code. It’s not ideal, but it works fine.

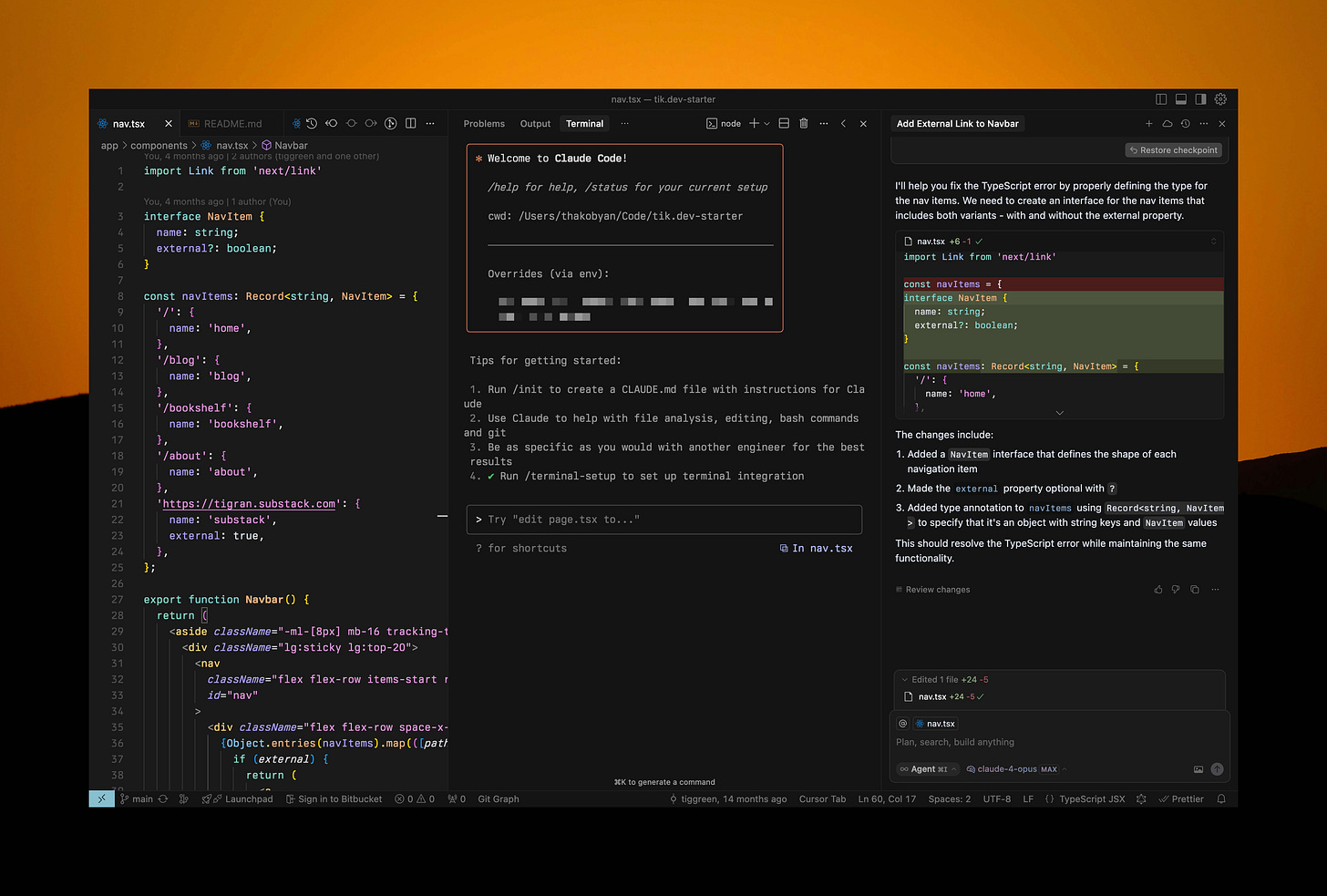

Claude Code (30 min video on how to use it)

Claude Code is a terminal-based agentic coding tool. I started experimenting with it right after it was announced. At first, I wasn’t too impressed, but after using it for a couple of weeks, I completely changed my view. Once it clicks, it’s one of the best agentic coding tools out there, if not the best.

I never thought I’d rely on the terminal to do real coding work. I’ve always been more of an IDE power user, with all my workflows and shortcuts set up there. But using CC (short for Claude Code) feels very different. I think it has to do with the natural language interface and the fact that you’re talking directly to the agent inside the terminal, where all your CLI tools are also available. You can ask CC to use any CLI tool installed on your system as part of a workflow you describe.

When compared to other agentic coding tools, I find Claude Code is the most thoughtful when planning and the most tasteful when executing. It feels like a real coworker who wants to get the job done with minimal changes, instead of dumping code. It’s more minimalist than most tools I’ve used.

Since it runs in the terminal, CC works with any IDE. My current setup is using it inside Cursor, as a separate agent from Cursor’s own. CC is aware of Cursor’s UI—so when you highlight a chunk of code, it automatically includes it in context, or just picks up the file you have open.

You can also extend CC with custom commands. I haven’t explored this much yet, but I can see it becoming a core part of team workflows, with company-specific workflows already set up for all engineers to use. Imagine having a custom command that pulls a JIRA ticket, helps you address it, and opens a PR with the changes, all from the terminal.

It’s also great for asking questions about your codebase. It uses agentic search to explore the code, which can be slow, but often gets things right on the first try. CC knows when to switch between fast and reasoning models depending on the task. You can also ask CC to “think hard” or “think harder”, and it will do exactly that for your prompt. Anthropic tuned Claude Code well for their Sonnet and Opus models and you can feel it when using it. It’s just better.

You can run multiple CC agents at the same time. In fact, I often keep two sessions open: one for writing code and one for reviewing what the other wrote. You can even run a third session to address comments from the second one. It keeps going, you get the idea.

CC supports a file called CLAUDE.md. This file gets pulled into context automatically at the start of a session. You can include useful info like common bash commands, testing instructions, style guides, or workflows. You can ask CC to create it for you with the /init command. I’ve added my own standard workflow there, and it’s become a core part of how I code.

There are a lot of great resources on how to use Claude Code effectively. My favorite is Claude Code: Best practices for agentic coding.

Finally, seeing how good Claude Code is made me think more about the future of computer interfaces. For the first time, I’ve started wondering if IDEs really have long-term staying power, or if all of them are going to evolve to look like modern terminals. Terminals are already well optimized for long-running tasks, which is exactly what coding agents need. Terminals don’t have graphical UIs, but do agents really need a UI to operate? Anyway, I might be wrong, but these are some ideas for you to think about.

Agentic coding is evolving fast, but one thing is clear: integrating agents into our development process can unlock next-level productivity gains. I encourage you to experiment with these tools and techniques yourself. While the tools will keep changing, many of the core principles will remain the same.

In the hands of skilled engineers who don’t just “vibe code,” but understand AI and software architecture deeply, and can guide a system toward a clear product goal, these tools make it possible to build software with unmatched speed and efficiency.

Current challenges when building with LLMs

As with any new technology, adopting AI tools to build systems comes with its own set of challenges. This is not a full list, just the ones I’ve run into while building with these tools:

Cost will become a challenge.

I suspect the cost of agentic tools will become a problem. At some point, we’ll need to figure out how to scale this usage in a way that is sustainable and still brings real business value to a company.

Code changes still need a lot of human review.

Just because agents can write code doesn’t mean we can skip the human loop. The code still needs to be readable, correct, and safe to ship. When working on systems, especially those that are in the critical path, we can't rely on a “looks good enough” vibe. We need sophisticated review and testing infrastructure to ship confidently.

The real bottleneck is delivery.

As more of the coding shifts to agents, what might slow us down isn’t the coding part anymore but getting the code to production safely. We need CI/CD pipelines with merge queues that can validate changes made by agents with a high degree of confidence.

Taste in design and code style matters more.

The models can write working code, but they don’t yet have a strong sense of coding style, UX, or design taste. You notice this especially in big codebases, where readability and consistency matter a lot. Without strong prompting, agents will often ignore your existing coding standards and conventions. That can create technical debt. I’ve found myself spending a lot of time tweaking prompts just to keep the output aligned with how we already write code. I heard the Cursor team say this is a top problem they’re trying to solve, and I can see why.

Programming language choice makes a difference.

The quality of the output really depends on how much training data the model has seen for a given language. In my experience, Python, Java, and TypeScript perform best. They feel smooth to work with, and the agent understands their idioms and libraries well. With Scala, things feel rougher. The completions are often less accurate, and I find myself needing to intervene more. If agents are becoming part of the team, language choice starts to matter in new ways.

Non-deterministic behavior is a major challenge.

LLMs don’t always produce the same answer twice. That makes it harder to debug, measure quality, and track improvements over time. You can’t just test once and trust it. You need observability tools that help you see what’s going on and compare runs over time. For me, Braintrust was critical when building AI tools. It helped me track failures, analyze changes, and make sense of why the Assistants API was behaving a certain way. Without good evals and observability, it’s hard to build anything reliable on top of platform tools.
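One simple way to act on "you can’t just test once and trust it" is to run the same prompt many times and track a pass rate instead of a single pass or fail. The sketch below assumes a hypothetical `make_flaky_model` that stands in for a real LLM call and gives a wrong answer some fraction of the time. It is not Braintrust’s API, only the underlying idea.

```python
import random
from typing import Callable


def pass_rate(generate: Callable[[str], str], check: Callable[[str], bool], prompt: str, runs: int = 20) -> float:
    """Call a non-deterministic generator several times; return the fraction of passing outputs."""
    passed = sum(1 for _ in range(runs) if check(generate(prompt)))
    return passed / runs


def make_flaky_model(seed: int, failure_rate: float) -> Callable[[str], str]:
    """Stand-in for an LLM (assumption): answers "5" instead of "4" some of the time."""
    rng = random.Random(seed)

    def generate(prompt: str) -> str:
        return "5" if rng.random() < failure_rate else "4"

    return generate


if __name__ == "__main__":
    model = make_flaky_model(seed=7, failure_rate=0.3)
    rate = pass_rate(model, lambda out: out == "4", "What is 2 + 2?", runs=50)
    print(f"pass rate over 50 runs: {rate:.2f}")
```

Recording this number per prompt and per model version gives you something to compare across runs, which a single green test cannot.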

Isn’t this amazing?

We’re living through a moment where the way we build software is being reinvented in front of our eyes. We get to learn, play, build, and shape what comes next—not years from now, but today. That feels rare. I truly believe the golden years of software development are still ahead of us.

Thanks for reading.